From biology to formalization

motivation, philosophy, history and realization of neural models

Chapter 1 – Introduction, motivation and history

1.1 Why neural networks?

There are problem categories that cannot be formulated as an algorithm: problems that depend on many subtle factors, for example the purchase price of a piece of real estate, which our brain can (approximately) calculate. Without an algorithm, a computer cannot do the same. Therefore the question to be asked is: How do we learn to explore such problems?

Exactly – we learn; a capability computers obviously do not have. Humans have a brain that can learn. Computers have some processing units and memory. They allow the computer to perform the most complex numerical calculations in a very short time, but they are not adaptive.

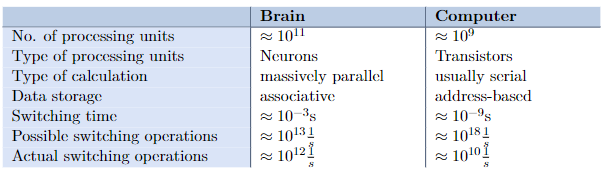

If we compare computer and brain, we will note that, theoretically, the computer should be more powerful than our brain: It comprises 10^9 transistors with a switching time of 10^−9 seconds. The brain contains 10^11 neurons, but these only have a switching time of about 10^−3 seconds.
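As a back-of-the-envelope check of these figures, the sketch below multiplies the number of switching units by the number of switches each unit can perform per second, which is the reciprocal of its switching time. It assumes, purely for illustration, that every unit switches at its maximum rate all the time. The helper name `peak_switching_ops` is hypothetical.

```python
# Back-of-the-envelope comparison of peak switching operations per second.
# Assumption (for illustration only): every unit switches at its maximum
# rate, all the time, with no idle units and no overhead.

def peak_switching_ops(units: float, switching_time_s: float) -> float:
    """Hypothetical helper: number of units times switches per unit per second."""
    switches_per_second = 1.0 / switching_time_s
    return units * switches_per_second

computer = peak_switching_ops(units=1e9, switching_time_s=1e-9)  # transistors
brain = peak_switching_ops(units=1e11, switching_time_s=1e-3)    # neurons

print(f"computer: {computer:.0e} switching operations per second")
print(f"brain:    {brain:.0e} switching operations per second")
print(f"ratio:    {computer / brain:.0e}")
```

Under this idealization the computer comes out at roughly 10^18 switching operations per second against roughly 10^14 for the brain. That is about four orders of magnitude in the computer's favor. The idealization itself is what breaks down in practice: it assumes that all units are busy all the time.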

The largest part of the brain is working continuously, while the largest part of the computer is only passive data storage. Thus, the brain works in parallel and therefore performs close to its theoretical maximum, from which the computer is orders of magnitude away (Table 1.1). Additionally, a computer is static. The brain, as a biological neural network, can reorganize itself during its "lifespan" and is therefore able to learn, to compensate for errors and so forth.

Table 1.1: The (flawed) comparison between brain and computer at a glance. Inspired by: [Zel94]

Within this text I want to outline how we can use these characteristics of our brain for a computer system.

So the study of artificial neural networks is motivated by their similarity to successfully working biological systems, which – in comparison to the overall system – consist of very simple but numerous nerve cells that work massively in parallel and (which is probably one of the most significant aspects) have the capability to learn. There is no need to explicitly program a neural network. For instance, it can learn from training samples or by means of encouragement – with a carrot and a stick, so to speak (reinforcement learning).

One result from this learning procedure is the capability of neural networks to generalize and associate data: After successful training a neural network can find reasonable solutions for similar problems of the same class that were not explicitly trained. This in turn results in a high degree of fault tolerance against noisy input data.

Fault tolerance is closely related to biological neural networks, in which this characteristic is very distinct. As previously mentioned, a human has about 10^11 neurons that continuously reorganize themselves or are reorganized by external influences (about 10^5 neurons can be destroyed while in a drunken stupor, and some types of food or environmental influences can also destroy brain cells). Nevertheless, our cognitive abilities are not significantly affected. Thus, the brain is tolerant against internal errors, and also against external errors, for we can often read a really "dreadful scrawl" although the individual letters are nearly impossible to read.

Our modern technology, however, is not automatically fault-tolerant. I have never heard of a case in which someone forgot to install the hard disk controller into a computer and the graphics card therefore automatically took over its tasks, i.e. removed conductors and developed communication, so that the system as a whole was affected by the missing component but not completely destroyed.

A disadvantage of this distributed fault-tolerant storage is certainly the fact that we cannot realize at first sight what a neural network knows and performs or where its faults lie. Usually, it is easier to perform such analyses for conventional algorithms. Most often we can only transfer knowledge into our neural network by means of a learning procedure, which can cause several errors and is not always easy to manage.

Fault tolerance of data, on the other hand, is already more sophisticated in state-of-the-art technology. Let us compare a record and a CD. If there is a scratch on a record, the audio information at this spot is completely lost (you will hear a pop), and then the music goes on. On a CD the audio data are stored in a distributed way: a scratch causes a blurry sound in its vicinity, but the data stream remains largely unaffected. The listener won't notice anything.

So let us summarize the main characteristics we try to adapt from biology:

- Self-organization and learning capability

- Generalization capability and

- Fault tolerance.

Which types of neural networks develop which abilities, and for which problem classes they can be used, will be discussed in the course of this work.

In the introductory chapter I want to clarify the following: "The neural network" does not exist. There are different paradigms for neural networks, for how they are trained and for where they are used. My goal is to introduce some of these paradigms and to add some remarks on practical application.

We have already mentioned that our brain, in contrast to a computer, works massively in parallel, i.e. every component is active at any time. If we want to state an argument for massively parallel processing, then the 100-step rule can be cited.